GPU vs CPU Rendering: Which Is Better for Your 3D Projects?

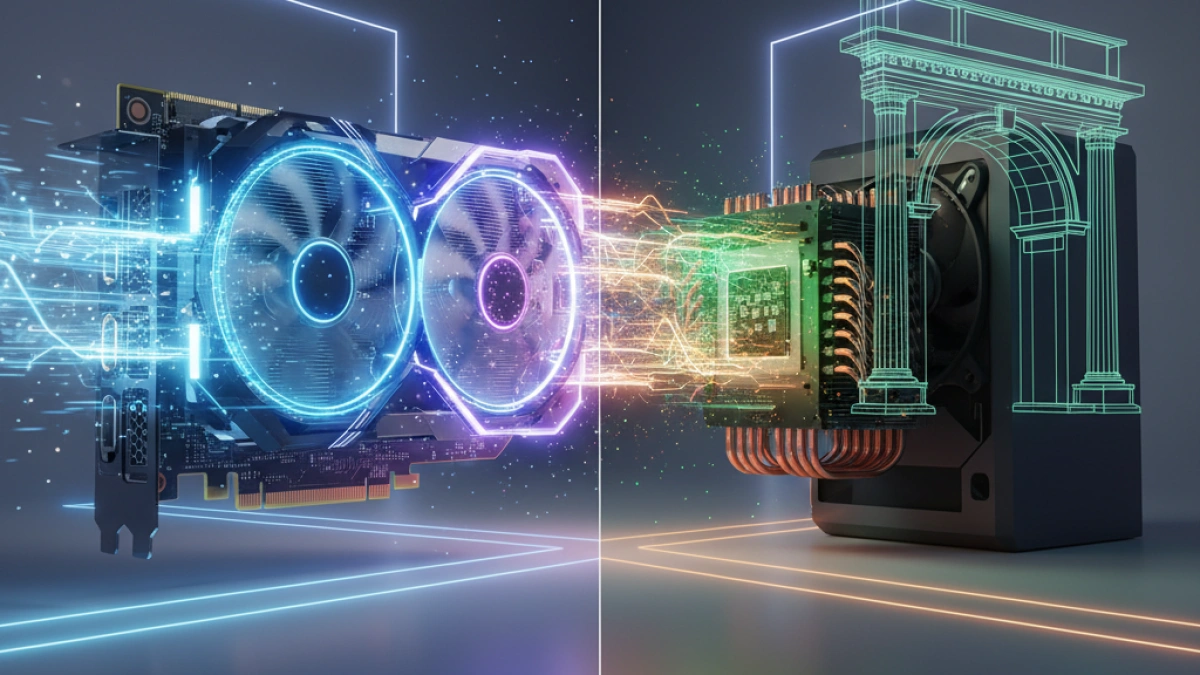

Rendering is one of the most crucial stages in the development of 3D projects, whether in the creation of video games, animations, or architectural visualizations. As technology advances, two rendering methods have stood out: GPU rendering and CPU rendering. In this article, we will explore the differences between the two, their advantages and disadvantages, and help you determine which option is best for your needs.

What is CPU Rendering?

CPU (Central Processing Unit) rendering uses the main processor of the computer to perform the complex calculations necessary to generate images. This method has been the industry standard for many years.

Advantages of CPU Rendering

- Precision and Quality: CPUs handle double-precision (64-bit) arithmetic at little extra cost, and CPU render paths often support an engine's full feature set, which helps with scenes that demand high numerical accuracy.

- Compatibility: Most rendering software packages, like Blender and 3ds Max, are compatible with CPU-based rendering methods.

- Efficiency in Specific Tasks: For projects that involve complex calculations, such as physics or fluid simulations, CPU-based processing can be more efficient.
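
To make the precision point above concrete, here is a minimal sketch. It assumes the common case where GPU render kernels work mostly in 32-bit floats while CPU code can use 64-bit floats cheaply; real engines differ. It uses only Python's standard library, and `to_float32` and `accumulate` are hypothetical helpers written for this illustration, not part of any renderer.

```python
import struct

def to_float32(x: float) -> float:
    """Round a Python float (64-bit) to the nearest 32-bit float."""
    return struct.unpack("f", struct.pack("f", x))[0]

def accumulate(step: float, count: int, single_precision: bool) -> float:
    """Add `step` to a running total `count` times."""
    if single_precision:
        step = to_float32(step)
    total = 0.0
    for _ in range(count):
        total += step
        if single_precision:
            total = to_float32(total)  # round after every addition
    return total

exact = 10_000.0  # 0.1 added 100,000 times, in exact arithmetic
err32 = abs(accumulate(0.1, 100_000, single_precision=True) - exact)
err64 = abs(accumulate(0.1, 100_000, single_precision=False) - exact)

print(f"32-bit accumulated error: {err32:.6f}")
print(f"64-bit accumulated error: {err64:.12f}")
```

The 32-bit total drifts much further from the exact value than the 64-bit one. A long simulation that accumulates many small steps can run into the same effect.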

Disadvantages of CPU Rendering

- Speed: CPUs are generally slower than GPUs, especially on highly parallel rendering tasks.

- Cost: High-performance CPUs can be expensive, and multi-core setups can significantly increase costs.

What is GPU Rendering?

GPU (Graphics Processing Unit) rendering uses the computer’s graphics card to perform rendering calculations. This method has gained popularity in recent years due to its speed and efficiency.

Advantages of GPU Rendering

- Speed: GPUs are designed to run thousands of calculations in parallel, which makes them much faster for many rendering tasks.

- Cost-Effectiveness: Modern graphics cards offer a lot of rendering power at a relatively low cost compared to high-performance CPUs.

- Real-Time Rendering: Many design applications now integrate real-time rendering, so changes are visible immediately.
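
The speed advantage comes from the structure of the work: each pixel can usually be computed without knowing the result of any other pixel. The sketch below illustrates only that independence, not GPU performance. The `shade` function is a made-up toy calculation, and Python threads stand in for parallel workers.

```python
from concurrent.futures import ThreadPoolExecutor

WIDTH, HEIGHT = 64, 48

def shade(x: int, y: int) -> int:
    """Toy per-pixel calculation that depends only on this pixel's coordinates."""
    return (x * x + y * y) % 256

def render_row(y: int) -> list:
    """Compute one row of the image."""
    return [shade(x, y) for x in range(WIDTH)]

# Serial: a single worker walks the rows in order.
serial = [render_row(y) for y in range(HEIGHT)]

# Parallel: rows are handed to several workers.
# map() returns the results in input order, so the image is assembled correctly.
with ThreadPoolExecutor(max_workers=8) as pool:
    parallel = list(pool.map(render_row, range(HEIGHT)))

print(serial == parallel)
```

Because no row depends on another, the work can be split across any number of workers and still produce the same image. GPUs exploit this property with far more parallel units than a CPU has cores.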

Disadvantages of GPU Rendering

- Memory Limitations: GPUs are limited to the memory on the graphics card (VRAM), which is usually smaller than system RAM and can be a constraint for larger projects.

- Less Precision in Complex Calculations: While they are fast, GPUs can struggle with certain complex simulations that require high precision.
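
A rough back-of-the-envelope check can show when the memory limitation above starts to matter. The sketch below counts only uncompressed textures and ignores geometry, mipmaps, texture compression, and any out-of-core features a render engine may offer. The scene, the 20% reserve, and the function names are assumptions for illustration.

```python
GIB = 1024 ** 3

def texture_bytes(width: int, height: int, channels: int = 4, bytes_per_channel: int = 1) -> int:
    """Size of one uncompressed texture in bytes."""
    return width * height * channels * bytes_per_channel

def fits_in_vram(texture_sizes, vram_gib: float, reserve_fraction: float = 0.2):
    """Return (fits, bytes_needed), keeping part of VRAM free for everything else."""
    budget = vram_gib * GIB * (1 - reserve_fraction)
    needed = sum(texture_bytes(w, h) for w, h in texture_sizes)
    return needed <= budget, needed

# Hypothetical scene: 200 uncompressed 4096x4096 RGBA textures, 8 bits per channel.
scene = [(4096, 4096)] * 200

ok, needed = fits_in_vram(scene, vram_gib=8)
print(f"{needed / GIB:.1f} GiB needed, fits in 8 GiB card: {ok}")
```

Each texture here takes 64 MiB, so the set needs 12.5 GiB and does not fit on an 8 GiB card, while a workstation with 64 GB of system RAM would hold it comfortably for CPU rendering.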

Comparison Between GPU and CPU Rendering

| Feature | GPU | CPU |
|---|---|---|
| Speed | High | Medium |
| Precision | Medium | High |
| Cost | Lower for high performance | Higher for high performance |
| Memory | Limited | More extensive |
| Ideal for | Real-time projects | Projects with complex simulations |

What is the Best Option for Your 3D Projects?

The choice between CPU and GPU rendering depends on several factors:

1. Project Type

- Small and Medium Projects: If you work on smaller 3D projects, GPU rendering is likely sufficient and will give you faster results.

- Large and Complex Projects: For projects involving complex physical simulations or requiring a high level of detail, CPU rendering may be the better option.

2. Budget

- Limited: If your budget is tight, consider investing in a good GPU rather than a top-tier CPU.

- Long-Term Investment: If your work heavily depends on rendering and you can invest more, a setup that includes both technologies may be ideal.

3. Software Used

Some applications are more optimized for one of the two methods. Research the specifications of the software you plan to use to ensure you choose the best technology for your needs.
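
The project-type and software factors above can be sketched as a simple rule of thumb. This is only an illustration of the reasoning in this article, not a definitive selection method. The function `suggest_renderer` and its parameters are hypothetical, and budget is left out because it depends on current hardware prices.

```python
def suggest_renderer(
    scene_gib: float,
    vram_gib: float,
    needs_heavy_simulation: bool,
    engine_supports_gpu: bool,
) -> str:
    """Return "GPU" or "CPU" based on the factors discussed in this article."""
    if not engine_supports_gpu:
        return "CPU"  # software used: no GPU path available
    if needs_heavy_simulation:
        return "CPU"  # project type: complex physics or fluid simulations
    if scene_gib > vram_gib:
        return "CPU"  # project type: scene does not fit in graphics memory
    return "GPU"      # otherwise favor speed

# Example: a 5 GiB product visualization on a 12 GiB graphics card.
print(suggest_renderer(scene_gib=5, vram_gib=12,
                       needs_heavy_simulation=False,
                       engine_supports_gpu=True))
```

In practice the thresholds are softer than this, and many studios run both: the GPU for look development and previews, the CPU for the scenes that do not fit.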

Conclusion

Both GPU and CPU rendering have their pros and cons. The key is to evaluate your specific needs, the type of project you will undertake, your budget, and the software you will use. A combination of both technologies often provides the best performance and quality.

As the technology continues to advance, understanding the capabilities and limitations of each rendering method will help you make a choice that benefits your 3D projects in the long run.